Kubernetes Application Deployed To Multiple Clusters

This example creates managed Kubernetes clusters using AKS, EKS, and GKE, and deploys the application on each cluster.
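The overall shape of such a program can be sketched as a list of clusters and a loop that deploys the app to each one. The sketch below is illustrative only and is not the repository's actual index.ts: the `Cluster` and `AppUrl` types, the `deployApp` function, and the `example.invalid` endpoints are hypothetical stand-ins for the Pulumi resources the real program would create. It also shows one way that disabling a cluster could amount to commenting out a single entry.

```typescript
// Hypothetical stand-in for a managed cluster; the real program would hold
// Pulumi resources (for example, a Kubernetes provider) instead of a string.
interface Cluster {
    name: string;     // label used to name per-cluster resources
    endpoint: string; // placeholder for the cluster's connection details
}

// Hypothetical shape of one exported URL entry.
interface AppUrl {
    name: string;
    url: string;
}

// Placeholder for "deploy the app to this cluster"; it only builds the URL
// that the app would be reachable at.
function deployApp(cluster: Cluster): AppUrl {
    return { name: cluster.name, url: `http://${cluster.endpoint}` };
}

// One entry per cluster. Commenting out an entry skips that cluster.
const clusters: Cluster[] = [
    { name: "aks", endpoint: "aks.example.invalid" },
    { name: "eks", endpoint: "eks.example.invalid" },
    // { name: "gke", endpoint: "gke.example.invalid" }, // disabled
];

// Deploy the app once per enabled cluster and collect the resulting URLs.
const appUrls: AppUrl[] = clusters.map(deployApp);
console.log(appUrls);
```

Keeping the clusters in one list means the deployment logic is written once, and adding or removing a cloud touches a single line.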

Deploying the App

To deploy your infrastructure, follow the steps below.

Prerequisites

- Install Pulumi

- Install Node.js

- (Optional) Configure AWS Credentials

- (Optional) Configure Azure Credentials

- (Optional) Configure GCP Credentials

- (Optional) Configure local access to a Kubernetes cluster

Steps

After cloning this repo, from this working directory, run these commands:

Install the required Node.js packages:

$ npm install

Create a new stack, which is an isolated deployment target for this example:

$ pulumi stack init

Set the required configuration variables for this program:

$ pulumi config set aws:region us-west-2 # Any valid AWS region here.
$ pulumi config set azure:location westus2 # Any valid Azure location here.
$ pulumi config set gcp:project [your-gcp-project-here]
$ pulumi config set gcp:zone us-west1-a # Any valid GCP zone here.

Note that you can choose different regions here. We recommend using us-west-2 to host your EKS cluster as other regions (notably us-east-1) may have capacity issues that prevent EKS clusters from creating.

(Optional) Disable any clusters you do not want to deploy by commenting out the corresponding lines in the index.ts file. All clusters are enabled by default.

Bring up the stack, which will create the selected managed Kubernetes clusters, and deploy an application to each of them.

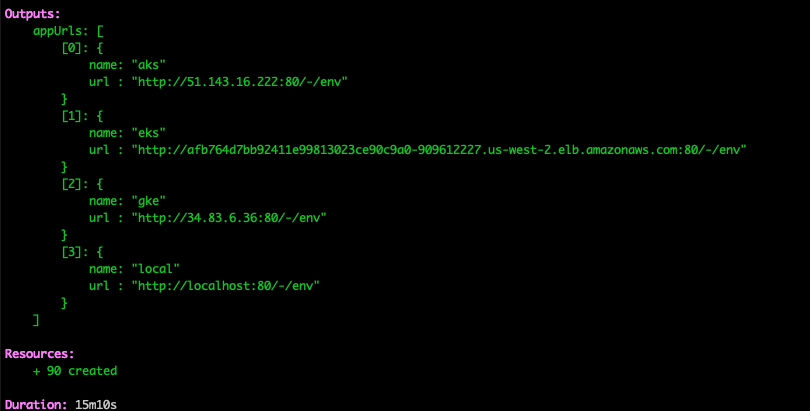

$ pulumi up

Here’s what it should look like once it completes:

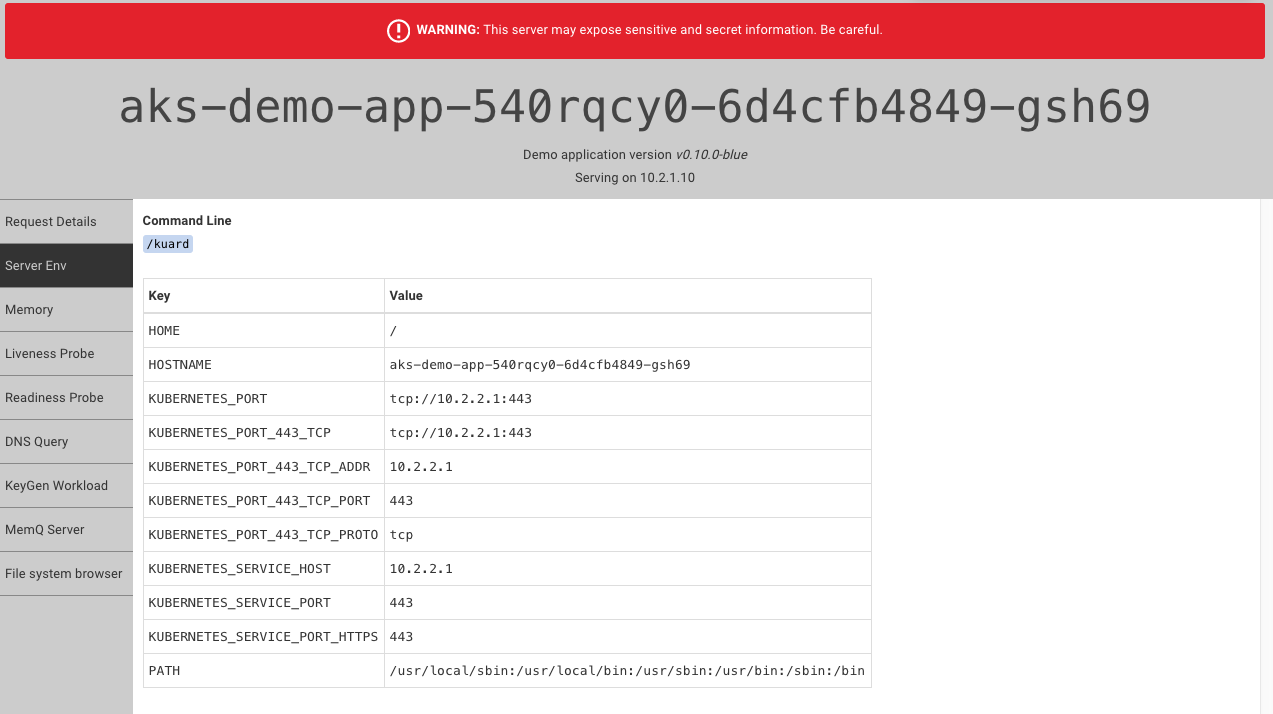

You can connect to the example app (kuard) on each cluster using the exported URLs.

Important: This application is exposed publicly over HTTP and can be used to view sensitive details about the node. Do not run this application on production clusters!

Once you’ve finished experimenting, tear down your stack’s resources by destroying and removing it:

$ pulumi destroy --yes
$ pulumi stack rm --yes

Note: The static IP workaround required for the AKS Service can cause a destroy failure if the IP has not finished detaching from the LoadBalancer. If you encounter this error, simply rerun pulumi destroy --yes, and it should succeed.
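If the teardown is scripted, the "rerun on failure" advice above could be automated with a small retry helper. This is a minimal sketch, not part of the example repository: `retry` is a hypothetical helper, and `fakeDestroy` is a stand-in that simulates a first attempt failing because the IP is still attached. In practice the action would invoke the Pulumi CLI instead.

```typescript
// Runs `action` until it succeeds or `maxAttempts` is reached,
// then rethrows the last error it saw.
function retry<T>(action: () => T, maxAttempts: number): T {
    let lastError: unknown = new Error("maxAttempts must be at least 1");
    for (let attempt = 1; attempt <= maxAttempts; attempt++) {
        try {
            return action();
        } catch (err) {
            lastError = err; // remember the failure and try again
        }
    }
    throw lastError;
}

// Hypothetical stand-in for running `pulumi destroy --yes`:
// fails on the first call, succeeds on the second.
let destroyCalls = 0;
function fakeDestroy(): string {
    destroyCalls++;
    if (destroyCalls === 1) {
        throw new Error("IP still attached to LoadBalancer");
    }
    return "destroyed";
}

const destroyResult = retry(fakeDestroy, 3);
console.log(destroyResult, destroyCalls); // prints "destroyed 2"
```

Capping the number of attempts keeps a persistent failure visible instead of looping forever.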